AI on Reverse State of the Art (Internship)

For my end of study internship i had the chance to work with a leading company in binary reverse engineering, my mission was to do review of the potential of recent “AI” boom to assist reverse engineer and, why not, allwed less skilled people to do reverse.

Context

At my school we had a shitty courses on reverse engineering, it was fully remote and it was frustrating that when we ask “how do you recognise this type of function ?” the only answer we get was “when you look at it you can see it with experience”.

So with some friend we look at the state of the art to see if somedy was working on a reverse assistant. As far as we found nothing ware really serious or working so we ask to a leading reversing company. And by luck at term they accepted that i perform an intership int this subject.

What did i do

At the start of the internship my internship master send to me some really interesting ressources so i figured out my technology watch process wasn’t efficient enoug, that’s why i setup my technology watcher.

Then, i’m not a reverse engineer, openfully i recently made an embedded key box projects, so i could try to study it in the point of view of a reverser to more understand their constraint.

Also for me compilation was just, .c -> .o -> Magical linking -> Binary, so i had to learn how it really works and the clang API really help me to do so.

Other problem, i was in the cybersecurity specialisation of my school, i only know the basics of AI so i needed to educate myself in the basics of NLP and get a better intuitive knowledge of general machine learning and deep neural network.

Building test DB

As far as i knew (and i was right), the most important stuff in machine learning is to start with an as big as possible and highest quality labelled database. I just didn’t knew what big really mean and my database wasn’t various enough (due to a biased validity checking system) but i figured it out too late.

At the end i had different representation of my DB, to each source you will have :

- A JSON describing his AST

- Program Compiled with GCC, Clang, LLVM (with 3 different parameter of obfuscation, permutated), Stripped and non stripped

- JSON of IDA extracted value with (for each compiled) :

- Call Graph

- Segmented function with raw instruction, ASM and Pseudo-C

A little of AI

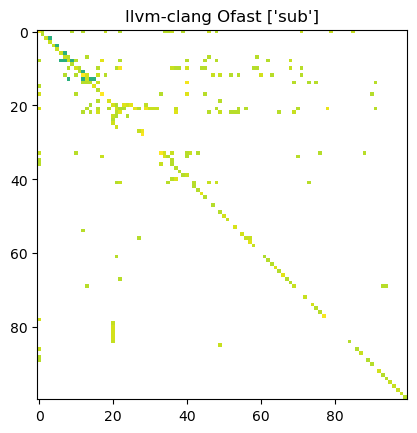

I needed to train my deep learning skills. So with my little knowledge i did some test and the more relevant one was with an LSTM studying image representation of basic block representing CFG and DFG.

With this representation i tried to performa obfuscation parameter identification, i had just good result but nothing very interesting and it was more juste to play.

Conclusion

If someone want to work in a project similar here are my advice.

- Ensure that you have a gigantic and various database

- Test the usage of Data Flow Graph, it doesn’t have been studied enough (according to me in November 2024)

- Our salvation is in Multiscale (Basic Block / Function/ Group of function / Full program) semantic embedding

The usecases i think are possible are specialised task expert code analyser. Even if Deep Learning solution was good to disassemble / decompile nobody would trust your system, it’s too critical to have precise description of the logic of the code, but you can try te leverage up the verbose level of the code with description, comment, and variable/function naming.

I tried to not be too long or boring but if you need more details on my work do not hesitate to contact me.