IR/IL

Introduction

I wanted to study decompilation more generally, without machine learning, and I found this site. I thought it could be useful, for machine learning purposes, to gather notes on these different IRs/ILs in one place for future use. I won’t go into detail because I’m not the best person to do so, but I’d like a newcomer reading this to get an easy overview of the different IR structures.

What is this

IRs (intermediate representations) are designed to represent a program as close as possible to the final assembly, but without the characteristics of a specific ISA. They are used for compilation, decompilation and analysis. In compilation, they serve different use cases such as optimization or obfuscation.

For all my explanations, I will use this C program as an example:

```c
int main(void) {
    int sum = 0;

    for (int i = 0; i < 5; i++) {
        if (i % 2 == 0) {
            sum += i;
        }
    }

    return 0;
}
```

VEX IR

Originally created for Valgrind, a tool for testing the memory safety of your program, VEX is also used by angr for decompilation. It has been wrapped in a Python library (pyvex), which makes it handy for automated analysis👌.

VEX IR works a bit like SSA in that each temporary is assigned only once within a block. However, it does not use phi functions to merge values, and a block can be exited along multiple paths.

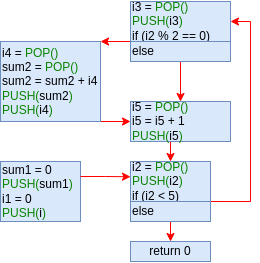

So from the code above we will get a graph like this :

So in comparison to SSA, VEX have a better ability to represent memory move so it may be a better representation to detect vulnerability (Logic because it have been made for Valgrind).

In real output there are many more data types, pointer names are normalized based on their usage, and every variable has an explicit size; you can check the complete file here. I think this way of representing data in basic blocks may be easier for NLP systems to learn from than raw ASM. However, it might be too close to machine logic, and standard transformers may not be best suited to this kind of data. They might perform better on more abstract representations, like pseudo-C.

P-Code IR

Used by Ghidra, P-Code is an RTL (register transfer language) designed to unify as many ISAs as possible. It also has a Python library (pypcode), glory to open source!!!

So it is very easy to get started with, and for our example we get code like this:

```shell
python -m pypcode -b x86:LE:32:default a.out
```

```
0x0/2: JG 0x47
0: unique[a100:1] = !ZF
1: unique[a180:1] = OF == SF
2: unique[a280:1] = unique[a100:1] && unique[a180:1]
3: if (unique[a280:1]) goto ram[47:4]
```

It’s much more compact, and it shows the references to the ALU flags in more detail. This is very useful if we want to identify a program that tries to call system functions or, more generally, abuses these flags.

Because it’s normalized and much smaller, it may be a good representation for context embedding with small BERT-derived models. But at first glance, for a non-expert human (like me), it’s very hard to tell what is happening here, so I have some doubts about standard NLP strategies.

angr Intermediate Language (AIL)

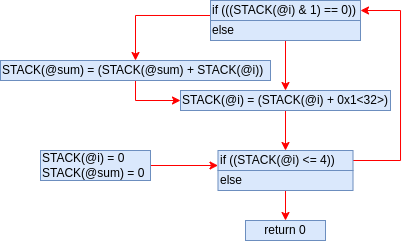

AIL is the language angr uses to perform decompilation. It looks like VEX, which is normal since it is built from VEX; the goal is a more abstract form with fewer machine-specific artifacts, so that it is easier to translate to C.

It really looks like the VEX representation, but with less detail about CPU data transfers, while still keeping track of where the data comes from. Some operations are also slightly different but equivalent in use. You can check the original file here.

I think having this slightly different but equivalent form could help build a bigger training dataset and push a model toward knowledge based on operation semantics rather than raw pattern recognition.

BNIL

Closed source, so I’m not sure about everything here.

Binary Ninja has three levels of IL: Low, Medium and High (LLIL, MLIL and HLIL). The High Level IL is basically decompiled pseudo-C.

I don’t have a Binary Ninja license. I may update this later, but for now I will only summarize the content of this site and can’t provide real output.

LLIL

This representation simplifies the content of each basic block into a kind of pseudocode. A hand-written approximation for our example looks like this:

```
[0] LLIL_SET_VAR(sum, 0)
[1] LLIL_SET_VAR(i, 0)
[2] LLIL_IF(CMP_LT(i, 5)) then goto [3] else goto [8]
[3] LLIL_IF(CMP_EQ(MOD(i, 2), 0)) then goto [4] else goto [6]
[4] LLIL_SET_VAR(sum, ADD(sum, i))
[5] LLIL_GOTO [6]
[6] LLIL_SET_VAR(i, ADD(i, 1))
[7] LLIL_GOTO [2]
[8] LLIL_RET(0)
```

This makes it easier to use for further processing.

MLIL

As far as I can understand from the public material, MLIL is a higher-level representation that can express more complex mechanics, closer to the function level. This may make it possible to represent function-level mechanics such as system calls without the system-specific details.