Source: https://arxiv.org/pdf/2412.07538v1

Date: 10 December 2024

Organisation(s): Università degli Studi di Napoli Federico II, Italy

Author(s): D. Cotroneo, F. C. Grasso, R. Natella, V. Orbinato

Note: When reading this, keep in mind that i personnaly think NN for decompilation is a bad idea so i may be biased

[Paper Short] Neural Decomp and Vuln Prediction

TLDR

It may be possible perform CWE detection with LLM derivated technology, for yes or no it’s precise to 95% and for CWE id finding it’s 80% detection. And the usage of Neural Decompilation enhanced the results compare to disassembly even if it’s not the best decompiled for reverser.

Goal

They introduce a system to do CWE Detection and Classification bases on the SoTa of classification (Transformers).

It use an LLM for decompilation which is a little tricky because the random aspect of LLM lead to a lack of trust from the user. But in this system the output is not for a human but for another model, i think it’s a good solution, so we can determine characteristic of the code that will me fact check by a human with standard decompilation methods, it’s more trustful.

How

They use various dataset for training :

| Dataset | # Samples | Compilable | Standardized Taxonomy |

|---|---|---|---|

| ReVeal | 18,169 | No | N/A |

| Devign | 26,037 | No | N/A |

| Juliet | 128,198 | Yes | Yes |

| BigVul | 264,919 | No | Only for 8,783 samples |

| DiverseVul | 330,492 | No | Only for 16,109 samples |

The only on i know is diversevul but i’m very curious about juliet.

That the data for training but we a process to use it in real world, check below.

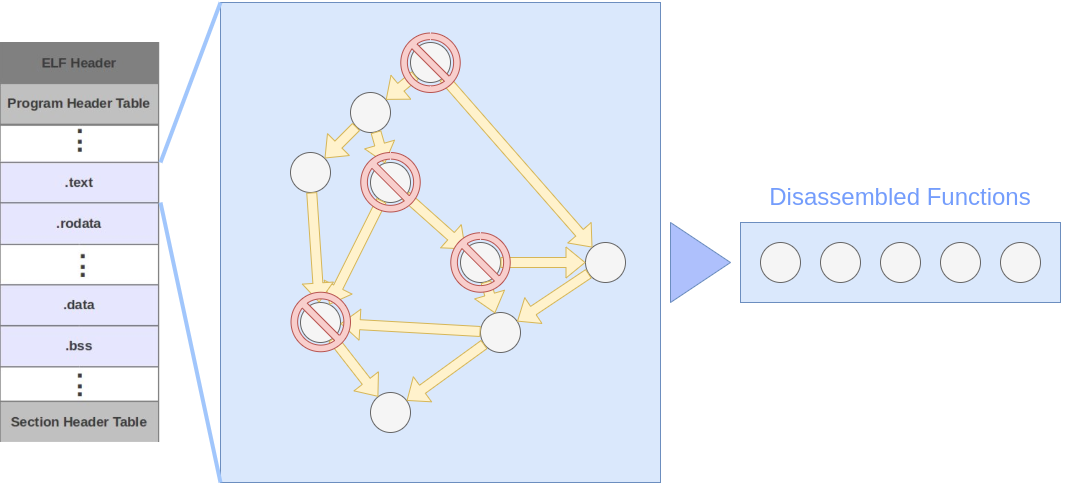

So they keep only the .text section of the data. Also they remove all the OS directive, main, lib … The goal is to only keep the function specific to this program.

This subfonction are what will be decompile and classified by the system.

Why it’s good

With this approach the system will analyse very small function which is essential for decompilation, the bigger it is the longer it will take.

It’s not really a problem to lack contextual data, the system is trained with raw function without context so it isn’t important.

How reliable

Decompilation

| Approach | Model | functions | Max length | BLEU-4 | ED | METEOR | ROUGE-L |

|---|---|---|---|---|---|---|---|

| Ghidra | IR | 1,000 | 338 | 22% | 23% | 29% | 22% |

| Katz et al. | RNN | 700,000 | 88 | - | 30% | - | - |

| Hoss et al. | fairseq | 2,000,000 | 271 | - | 54% | - | - |

| ————————— | ––––––– | ———–– | —————–– | –––– | —— | –––– | ——— |

| Proposed | CodeBERT | 75,000 | 338 | 31% | 39% | 43% | 56% |

| CodeT5+ | 75,000 | 338 | 48% | 49% | 55% | 70% | |

| fairseq | 75,000 | 338 | 58% | 59% | 64% | 77% |

WARNING : A better score doesn’t mean a better material for reverser !! It just mean less “distance” between result and source

So we can see that with standard NLP efficiency mesure system their work is able to have a “better” decompile than Ghidra and concurrency NLP methods.

That mean more that the decompile code is better to be use by another model. So that’s good for their usecase !

I’m curious of the same statistic with decompiled from Binja and IDA

Classification

| Approach | Model | Yes or no | Yes or no | exact CWE id | exact CWE id |

|---|---|---|---|---|---|

| Acc (%) | F1 (%) | Acc (%) | F1 (%) | ||

| Flawfinder | Static | 52 | 49 | 13 | 11 |

| Schaad et al. | SRNN | 88 | 88 | 77 | 79 |

| Proposed | SRNN | 88 | 88 | 72 | 70 |

| LSTM | 94 | 94 | 75 | 72 | |

| GRU | 91 | 90 | 78 | 77 | |

| CodeT5+ | 94 | 94 | 81 | 81 | |

| CodeGPT | 95 | 95 | 82 | 82 | |

| CodeBERT | 94 | 94 | 83 | 82 |

Long Story short, it work well.

The Binary detection (Yes or No) perform very well to detect potentially vulnerable function. And the exact CWE matching is promissing (not enough for fully automated analysis but a goog indicator for human evaluation).

Conclusion

I think their work show that even if we cant trust Neural Decompiled it may be usefull for higher level classification problem.

It’s like an overkill and fat embedding so may be usefull for my semantic embedding quest. It need to be tested on other classification problem like purpose or author identification.

Also having a decompilation model is a great tool to go for decompiled enhancer.

But, there is a but, i may be distracted by i didn’t find details about how the data have been separated between train and test. So we can’t be sure if the model is good on is database or if he generalised this knowledge.